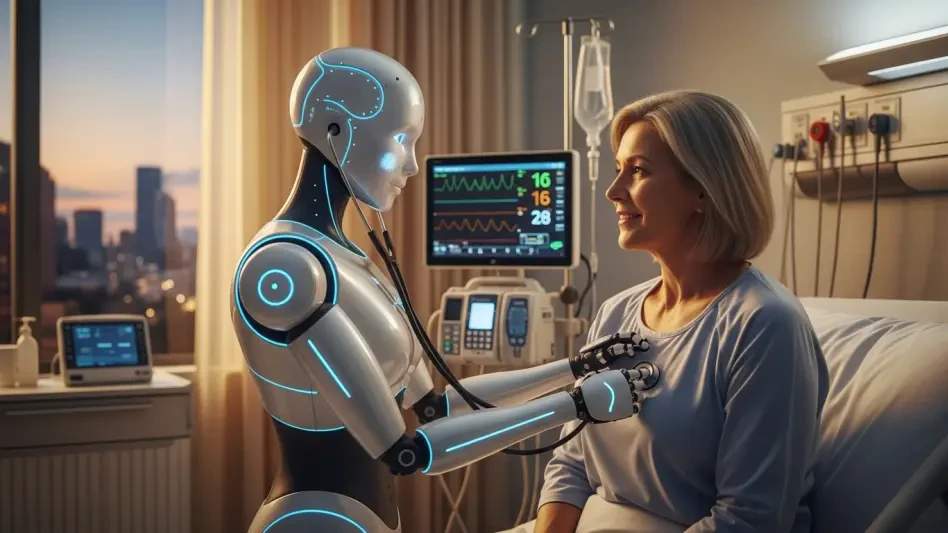

In the rapidly evolving world of healthcare, artificial intelligence (AI) stands as a transformative force. More than 1,200 AI-powered medical devices have already been authorized for use in clinical settings, marking a pivotal moment in which technology promises to redefine patient care. That promise also raises a critical question: how can such powerful tools be integrated without losing the human touch that defines medicine? The answer lies in prioritizing clinician input to ensure that AI systems are not only technologically advanced but also safe, equitable, and aligned with real-world clinical needs. This report examines the current landscape of AI in healthcare, explores key trends and challenges, and explains why collaboration with clinicians is essential for fostering trust and maximizing impact.

The Landscape of AI in Healthcare Today

The integration of AI into healthcare has accelerated dramatically in recent years, reshaping how care is delivered across various specialties. From oncology to cardiology and critical care, AI tools are being deployed to enhance diagnostic precision and streamline clinical workflows. Major tech companies, innovative startups, and established healthcare providers are driving this shift, leveraging machine learning and data analytics to process vast amounts of medical data with unprecedented speed and accuracy. The result is a dynamic ecosystem where technology is becoming an integral part of patient management and treatment planning.

Federal momentum has played a significant role in this transformation, with agencies like the Food and Drug Administration (FDA) and the Office of the National Coordinator for Health Information Technology (ONC) providing oversight and guidance. Their efforts focus on ensuring that AI tools meet stringent safety and efficacy standards while promoting interoperability across systems. This regulatory support, combined with the authorization of numerous AI devices, signals a robust commitment to harnessing technology for better health outcomes. Amid this progress, one crucial task remains: ensuring that these tools fit the realities of clinical practice.

The significance of AI in healthcare extends beyond individual patient interactions to broader systemic improvements. By automating routine tasks and providing decision support, AI has the potential to alleviate clinician burnout and optimize resource allocation in overburdened systems. However, the rapid pace of adoption also brings challenges that must be addressed to prevent unintended consequences. Bridging the gap between technological innovation and practical application is paramount for sustainable integration.

Trends and Growth in Healthcare AI

Emerging Technologies and Market Drivers

The healthcare AI sector is witnessing a surge of cutting-edge technologies that promise to redefine diagnostic and treatment paradigms. Advanced diagnostic algorithms are enabling earlier detection of diseases, while predictive analytics help anticipate patient outcomes and optimize care plans. These innovations are fueled by growing expectations from both clinicians and consumers for data-driven, personalized healthcare solutions that prioritize efficiency and effectiveness. The push for such tools is particularly strong in high-stakes fields where precision can mean the difference between life and death.

Market drivers further accelerate this trend, with cost reduction and improved patient outcomes topping the list of priorities for healthcare organizations. The demand for patient-centric solutions has also spurred investment in AI systems that can adapt to individual needs and preferences. As a result, opportunities abound for developers to create tools that not only address clinical challenges but also enhance the overall patient experience. This alignment with value-based care principles is shaping the direction of innovation in the industry.

Additionally, the evolving landscape reflects a broader societal shift toward embracing technology as a core component of health management. Stakeholders are increasingly recognizing the potential of AI to tackle systemic issues such as access disparities and resource shortages. However, realizing this potential hinges on designing systems that are intuitive and relevant to the end users—clinicians who navigate complex care environments daily.

Market Performance and Future Projections

Current data paints a promising picture of healthcare AI, with market size expanding rapidly due to widespread adoption across clinical settings. Studies indicate significant improvements in diagnostic accuracy and workflow efficiency, with some AI tools outperforming traditional methods in detecting conditions like cancer from imaging scans. Adoption rates continue to climb as healthcare providers seek to leverage these technologies to meet rising patient demands and operational challenges. The financial investment in this space reflects confidence in AI’s transformative capabilities.

Looking ahead, projections suggest robust growth for healthcare AI from 2025 to 2027, with market analysts anticipating a surge in both innovation and implementation. Forecasts highlight the potential for AI to revolutionize care delivery, particularly if guided by human-centered principles that prioritize clinician collaboration. The emphasis on measurable outcomes is expected to drive further development of tools that integrate seamlessly into existing systems while delivering tangible benefits to patients and providers alike.

This growth trajectory, however, is not without caveats. Ensuring that AI systems remain aligned with clinical realities will be critical to sustaining momentum. Performance indicators suggest that while the technology holds immense promise, its success depends on continuous refinement and adaptation to meet the nuanced demands of healthcare environments. The future of AI in this sector looks bright, provided stakeholders commit to a balanced approach that values human expertise alongside technological advancement.

Challenges in Implementing AI in Clinical Settings

The road to effective AI adoption in healthcare is fraught with obstacles that can undermine even the most sophisticated systems. Poor usability often tops the list, as many AI tools fail to align with the practical workflows of clinicians, leading to frustration and low uptake. Algorithm aversion, where healthcare providers distrust AI due to perceived errors or lack of transparency, compounds this issue, creating a barrier to acceptance. Trust remains elusive when systems operate as opaque “black boxes,” leaving clinicians skeptical of their recommendations.

Interoperability presents another significant hurdle, as fragmented electronic health record (EHR) systems hinder seamless integration of AI tools. This disconnection can result in inefficiencies, such as duplicate testing or incomplete data sharing, ultimately impacting patient care. Addressing these technical challenges requires a concerted effort to design solutions that can operate across diverse platforms while maintaining data integrity and security. Without such compatibility, the full potential of AI remains out of reach.

Ethical concerns also loom large. One is clinician deskilling, in which over-reliance on AI erodes professional skills. Evidence from trials such as the ACCEPT study suggests that prolonged use of AI assistance can diminish clinicians’ performance when they work without it. Another is bias: AI systems trained on unrepresentative data threaten to exacerbate health disparities. Mitigating these issues demands strategies like transparent design and active clinician collaboration to ensure that technology supports rather than supplants human judgment.
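One way to make the bias concern concrete is to compare a tool's sensitivity (the share of true cases it catches) across patient subgroups. The following is a minimal sketch, not a validated audit method: the function names, the record layout of `(group, y_true, y_pred)`, and the toy data are all assumptions made for illustration.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: sensitivity} for records of (group, y_true, y_pred).

    Groups with no confirmed positive cases are left out, because
    sensitivity is undefined for them.
    """
    true_pos = defaultdict(int)
    actual_pos = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            actual_pos[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {g: true_pos[g] / actual_pos[g] for g in actual_pos}


def max_sensitivity_gap(records):
    """Largest difference in sensitivity between any two groups."""
    rates = sensitivity_by_group(records)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: (subgroup, confirmed diagnosis, AI prediction).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]

rates = sensitivity_by_group(records)
gap = max_sensitivity_gap(records)
print(rates, gap)
```

A large gap does not by itself prove that a tool is biased, but it gives clinicians and developers a specific number to investigate together.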

Regulatory Framework and Policy Influence on Healthcare AI

The regulatory environment shaping healthcare AI is a critical factor in its development and deployment, providing both structure and accountability. Agencies such as the FDA, ONC, and Centers for Medicare and Medicaid Services (CMS) have established frameworks to guide innovation while prioritizing patient safety. Initiatives like the Trusted Exchange Framework and Common Agreement (TEFCA) aim to enhance interoperability, ensuring that AI tools can function cohesively within the broader healthcare ecosystem. These policies reflect a commitment to responsible advancement.

A notable policy approach gaining traction is outcomes-based contracting (OBC), which ties funding to measurable clinical improvements. This model incentivizes developers to focus on effectiveness and usability, encouraging clinician involvement throughout the AI lifecycle. By linking financial support to real-world impact, OBC fosters accountability and aligns technological progress with the ultimate goal of better patient outcomes. Such mechanisms are vital for steering the industry toward sustainable solutions.

Continuous post-market surveillance is another cornerstone of regulatory oversight, addressing concerns like model degradation and emerging biases. Monitoring AI performance over time ensures that tools remain safe and equitable as patient populations and clinical practices evolve. This ongoing evaluation, supported by federal guidance, underscores the importance of adaptability in maintaining trust and efficacy. As policies evolve, they must balance innovation with safeguards to prevent unintended harm and promote fairness across diverse communities.
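As an illustration of what monitoring for model degradation might look like in practice, the sketch below tracks a tool's accuracy over a rolling window of clinician-confirmed cases and flags a drop below a validation baseline. The class name, the window size, and the tolerance are hypothetical choices for this example and are not drawn from any regulatory guidance.

```python
from collections import deque


class PerformanceMonitor:
    """Rolling-window accuracy check against a fixed baseline (illustrative)."""

    def __init__(self, baseline_accuracy, window_size=100, tolerance=0.05):
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        # Only the most recent `window_size` outcomes are retained.
        self.outcomes = deque(maxlen=window_size)

    def record(self, prediction, confirmed_label):
        """Store whether the prediction matched the clinician-confirmed label."""
        self.outcomes.append(prediction == confirmed_label)

    def rolling_accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self):
        """True once a full window falls below baseline minus tolerance."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough cases yet to judge
        return self.rolling_accuracy() < self.baseline_accuracy - self.tolerance


# Hypothetical usage: baseline of 90% accuracy, window of 10 cases.
monitor = PerformanceMonitor(baseline_accuracy=0.90, window_size=10)
for _ in range(10):
    monitor.record(prediction=1, confirmed_label=1)   # ten correct calls
flag_before = monitor.degraded()
for _ in range(3):
    monitor.record(prediction=1, confirmed_label=0)   # three misses
flag_after = monitor.degraded()
print(flag_before, flag_after)
```

A real surveillance program would also need to account for delays in label confirmation and shifts in case mix, and would route any flag to clinicians for review rather than acting on it automatically.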

The Future of AI in Healthcare with Clinician Collaboration

Looking forward, the trajectory of healthcare AI points to exciting possibilities, particularly with the advent of real-time decision support tools that can enhance clinical workflows. These systems promise to provide actionable insights at the point of care, empowering clinicians to make informed decisions swiftly. Coupled with emerging fields like generative AI and personalized medicine, the potential to tailor treatments to individual patients is becoming increasingly tangible. Such advancements herald a new era of precision in healthcare delivery.

Market disruptors are also reshaping the landscape, with consumer preferences shifting toward transparent and equitable care models. Patients and providers alike are demanding AI solutions that prioritize clarity and fairness, pushing developers to rethink design approaches. Clinician-driven innovation stands at the forefront of this shift, ensuring that tools are grounded in the realities of medical practice. Adaptive health systems that evolve based on real-world feedback are essential for meeting these expectations.

Global policy alignment, alongside economic and regulatory changes, will further influence AI’s path in healthcare. Establishing safeguards against unregulated deployment remains urgent, as unchecked systems risk undermining patient safety. By fostering collaboration among clinicians, developers, and policymakers, the industry can navigate these complexities and harness AI’s potential responsibly. Economic conditions may shape investment priorities, but the core focus must remain on integrating clinician expertise to drive meaningful progress.

Conclusion and Recommendations for Human-Centered AI

The preceding analysis makes clear that clinician input is indispensable in shaping a future where AI enhances rather than disrupts healthcare. The challenges of poor usability, limited interoperability, and ethical risk underscore the necessity of grounding technology in the lived experiences of medical professionals. This collaboration is the linchpin for ensuring that AI systems are not only innovative but also practical and trustworthy in diverse clinical settings.

Moving forward, stakeholders must commit to actionable steps that embed human-centered design at the core of AI development. Clinician involvement in every phase, from ideation to post-market evaluation, should be a non-negotiable standard, supported by federal policies such as outcomes-based contracting that reward measurable clinical improvement. Establishing robust interoperability standards will further enable seamless integration, reducing inefficiencies and enhancing care coordination across systems.

Beyond these measures, fostering a culture of continuous improvement through regular bias audits and clinician feedback loops will be crucial. Encouraging partnerships among developers, regulators, and healthcare providers can pave the way for adaptive health systems that evolve with emerging evidence. By prioritizing these strategies, the healthcare industry can confidently embrace AI as a powerful ally, ensuring that technology serves humanity with equity, safety, and compassion at its heart.