From breakthroughs in scans and scripts to breakthroughs in speech: why AI’s next leap is interpersonal

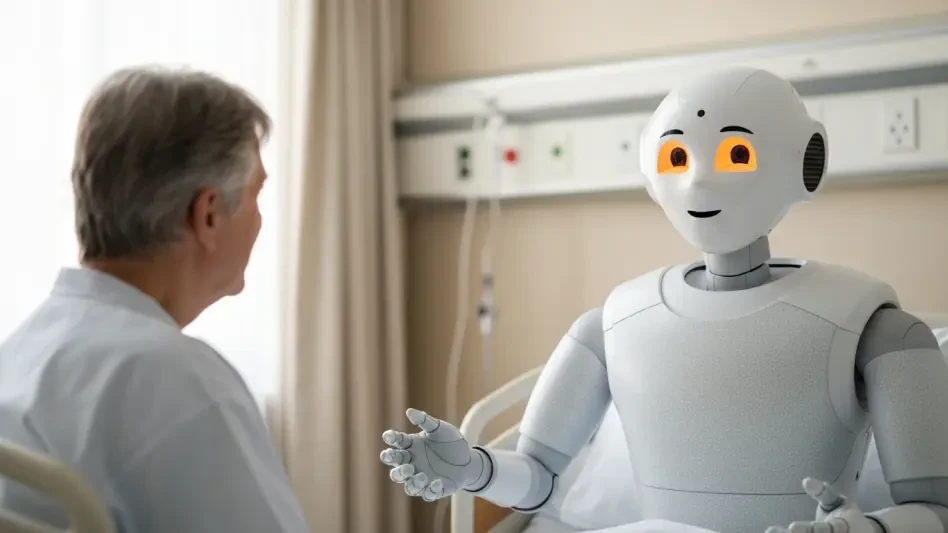

A quiet race is underway to improve the few minutes between a clinician and a patient, when words, tone, and timing decide whether care feels safe and clear and whether the plan seems worth following afterward. The contenders in this race are not shiny scanners but conversational systems built to teach listening. Voices from hospitals, schools of nursing, and digital health companies agree that the headline AI wins set expectations for precision. These include pathology-assist tools like Stanford’s Nuclei.io, drug discovery partnerships such as AstraZeneca and Algen Biotechnologies, and ambient clinical notes from Microsoft’s Dragon Copilot. Yet the next differentiator is interpersonal skill at scale. The stakes are concrete: empathy, clarity, and listening shape trust and adherence, and nearly 60% of patients say communication quality alone could make them switch providers.

Educators, patient-experience leaders, and simulation directors converge on a shared point: conversational and generative AI now create realistic practice that is hard to replicate with faculty time alone. They diverge, however, on emphasis. Some champion AI for its contextual feedback and longitudinal tracking; others value it chiefly as a safe space for repetition. Across viewpoints, the promise is the same: make “soft” skills coachable and measurable without replacing clinicians, and do so in a way that preserves humanity rather than flattening it.

Turning bedside manner into a trainable discipline

Communication failures are costly; adaptive AI practice makes them visible and fixable

Program directors describe a clear training gap: missed cues, rushed explanations, and weak empathy cost organizations through safety events, readmissions, and patient churn. Patient advocates add that poor communication erodes trust, which can derail even a flawless clinical plan. In this light, AI-guided practice looks less like a novelty and more like risk management.

Frontline managers who piloted AI simulations report measurable gains: clearer explanations, steadier tone under pressure, and stronger shared decision-making. Some educators, however, caution against overclaiming that these gains transfer to the bedside; they argue that validation must track outcomes in live care, not just simulation scores. Privacy, cultural sensitivity, and bias also surface in the debate, with consensus forming around strict scenario governance and transparent oversight.

Virtual patients that remember: generative models bring realism, variability, and psychological safety

Simulation specialists highlight a leap beyond scripted role-play: virtual patients carry memory across sessions, vary their emotional state, and respond to nuances in language. Learners can watch a conversation de-escalate when reflective listening lands, or escalate when it does not, mirroring clinical reality. Unlike one-off practice, scenarios can reset, branch, and adapt, which reduces performance anxiety while expanding exposure.

Early adopters point to traction in certified nursing assistant (CNA) onboarding, medical tech training, and behavioral health. In mental health modules, richer backstories (housing stress, family dynamics, or cognitive decline) make assessments more authentic. Yet faculty warn of risks: content drift if models are left uncurated, overreliance on a single “right answer,” and difficulty levels that educators must actively calibrate. Most agree that unlimited practice and consistent standards outweigh these downsides when oversight is strong.

Scoring empathy without reducing it to a checkbox

Assessment leads praise systems that evaluate context, not just word counts. These tools analyze explanation clarity, reflective listening, tone, turn-taking, and responsiveness, then return narrative feedback rather than a single simplistic score. Learners receive transcripts with key moments highlighted and explained, which helps them understand why a particular phrasing soothed or confused the patient.

Longitudinal coaching is a growing trend. Analytics map progress across rotations, surface blind spots, and tailor remediation while preserving learners’ psychological safety. Some cultural-competency trainers urge caution, because what “good” looks like varies by region and community. The emerging practice is to tune rubrics to local norms, which strengthens relevance and avoids a one-size-fits-all model of empathy.

Where adoption is sticking first—and what that means for scale

Health systems report momentum in high-contact roles and mental health training, where relational competence is central to clinical effectiveness. Leaders frame this as a strategic response to staffing constraints: standardized, scalable AI practice shortens time to proficiency while reinforcing patient-first habits.

The operating model many endorse pairs eLearning partners with internal faculty, enabling rapid rollout and consistent quality checks across sites. As models grow more nuanced, teams report higher engagement and better transfer to practice. Executives note a secondary effect: organizations that invest early differentiate themselves on patient-experience metrics, which shapes reputation and retention.

From insight to implementation: a pragmatic playbook

Implementation veterans recommend starting small and measuring thoroughly. Pilots with frontline cohorts establish a communication baseline, then track narrative and quantitative improvements through scheduled practice blocks. The goal, they argue, is to prove behavior change in context, across different units, patient populations, and shift patterns, before expanding.

Most trainers blend AI with human coaching. Faculty debriefs help learners interpret feedback, cultural-sensitivity guardrails prevent harm, and scenario governance keeps content relevant. Operational teams fold simulations into onboarding and continuing medical education (CME), align them with patient-experience metrics, and use analytics to personalize learning paths. When done well, AI runs in the background while human mentors guide reflection and growth.

The human core, amplified

Clinicians, educators, and patient advocates align on a final theme: AI is not a substitute for compassion. It is a scaffold that frees time and reinforces the moments that matter (listening, explaining, empathizing), so that bedside manner becomes a practiced discipline rather than a personality trait. In a staffing-constrained era, measurable soft skills function as an organizational asset tied to satisfaction, adherence, and safety.

This roundup closes with clear next steps. Teams are committing to trials that center on high-contact roles, investing in scenario governance to manage bias and privacy, and linking training outcomes to real patient metrics. The most consistent advice is to build an iterative loop (simulate, measure, coach, and redeploy) so that interpersonal skill growth becomes visible, sustainable, and inseparable from quality care.