As a pioneer in medical technology, Faisal Zain has spent years shaping the future of healthcare through innovative devices for diagnostics and treatment. With a deep understanding of how technology intersects with patient care, Faisal offers unique insights into the transformative potential of artificial intelligence in medicine. In this interview, we explore the evolving role of AI in healthcare, the importance of trust and reliability, AI’s expansion beyond traditional applications, the need for education in this space, and the critical balance between technology and the human touch in medical practice.

How would you describe the current landscape of AI in healthcare, especially in terms of its progress and challenges?

AI in healthcare is at an exciting yet complex stage. It’s already making waves in areas like diagnostics, drug discovery, and even assisting in surgeries with robotic precision. However, its integration into everyday clinical practice is still uneven. The technology is there, but the systems—whether it’s hospitals or insurance frameworks—aren’t always ready to adopt it fully. There’s a gap between the potential of AI and its practical deployment, largely due to issues like trust, accountability, and ensuring that these tools align with medical standards.

What are some of the most innovative ways AI is being applied in healthcare today?

Right now, AI is shining in diagnostics, particularly in radiology, where it helps analyze scans with incredible accuracy. It’s also being used in drug discovery to speed up the process of identifying potential treatments. In surgery, AI-powered robotic systems are enhancing precision, allowing for minimally invasive procedures. Beyond that, we’re seeing early applications in fields like oncology, where AI can predict disease progression, and even in personalized medicine, tailoring treatments to individual patients based on vast data sets. It’s inspiring to see how far we’ve come.

Why do you think trust is such a critical factor in the adoption of AI in healthcare?

Trust is everything because healthcare isn’t just about technology—it’s about people’s lives. Patients and doctors need to feel confident that AI tools are safe, accurate, and fair. If there’s any doubt about how a decision was made or whether personal data is secure, that trust erodes. Issues like algorithmic bias, where AI might unintentionally favor certain groups over others, or lack of transparency in how models work, make people hesitant. Without trust, even the best AI tools won’t be embraced.

What specific hurdles are preventing people from fully trusting AI in medical settings?

There are a few big challenges. First, accountability—who’s responsible if an AI tool makes a wrong call? Is it the developer, the doctor, or the hospital? Then there’s data privacy; patients worry about how their sensitive information is used or protected. Algorithmic bias is another concern, as AI systems can reflect flaws in the data they’re trained on. These issues create a perception that AI might not always act in the best interest of the patient, which is a tough barrier to overcome.

How can healthcare providers and policymakers work together to build confidence in AI tools?

It starts with transparency. Providers need to clearly explain how AI is used, what data it relies on, and how decisions are made. Policymakers can help by setting strict standards for data protection and requiring rigorous testing of AI tools before they’re used in clinical settings. Engaging patients and professionals in the conversation is key—let them voice concerns and be part of the solution. Certification systems or public audits of AI performance could also go a long way in showing that these tools are reliable and safe.

Can you explain what ‘AI assurance’ means in the context of healthcare and why it matters?

AI assurance is about proving that AI systems are not just smart, but dependable in a clinical environment. It means creating frameworks to test and validate that AI decisions align with medical knowledge and deliver consistent, accurate results. This matters because healthcare isn’t a place for guesswork. If an AI tool is used to diagnose or recommend treatment, there needs to be a measurable guarantee of its performance. Assurance shifts the focus from just building better tech to ensuring it’s trustworthy for real-world use.

Why has radiology become the frontrunner in AI adoption within healthcare?

Radiology is a natural fit for AI because it deals with visual data, such as scans and images, that AI models can analyze effectively. These tools can spot patterns or anomalies in X-rays, MRIs, or CT scans faster, and sometimes more accurately, than the human eye. It’s also a field with a high volume of data available to train AI systems. Together, those factors help explain why about 80% of healthcare AI applications are in radiology right now. It’s a proving ground for what AI can do, setting the stage for other specialties.

What other medical fields are beginning to see significant AI integration, and how?

Beyond radiology, surgery is picking up steam with AI-driven robotic systems that enhance precision during operations. Oncology is another area, where AI helps predict how cancers might progress or respond to treatments. We’re also seeing it in molecular medicine, where it aids in understanding diseases at the molecular level. In these fields, AI isn’t just about doing tasks faster; it’s about improving outcomes through better prediction and tailored approaches, which is incredibly promising.

Why is it so important to teach AI literacy to the next generation of healthcare professionals?

AI literacy is crucial because the future of healthcare will inevitably involve these tools. Young doctors and researchers need to understand how AI works, its limitations, and how to use it as a complement to their expertise, not a crutch. Without this knowledge, there’s a risk of over-reliance or misinterpretation of AI outputs. Educating them ensures they can harness AI’s power while maintaining the critical thinking and curiosity that drive medical innovation.

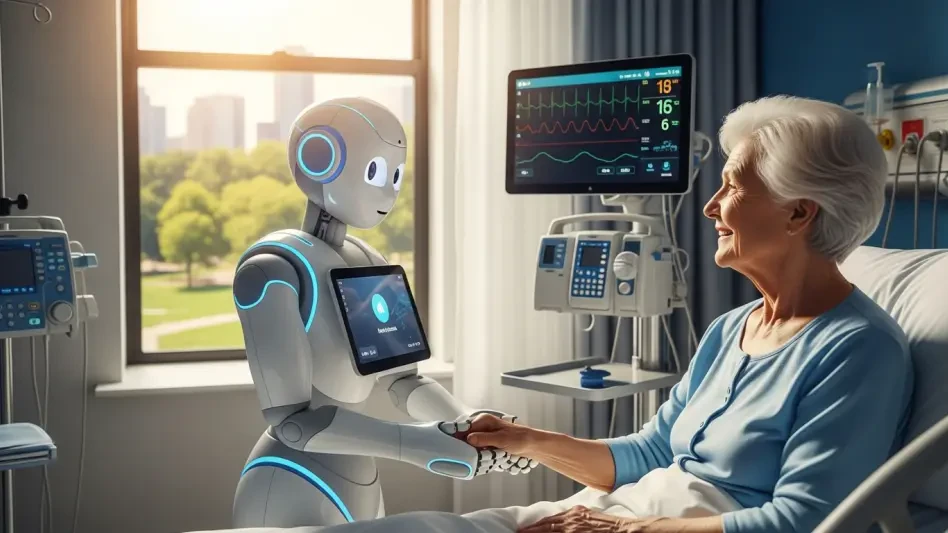

How do you see the balance between AI-driven healthcare and the human element playing out in the future?

AI is a tool, not a replacement for doctors. It can analyze data, suggest options, and handle repetitive tasks, but the human element (empathy, judgment, and the ability to connect with patients) remains irreplaceable. The future lies in empowering clinicians: AI can free them to focus on patient care rather than paperwork. The challenge is ensuring that as AI grows, we don’t lose sight of the personal touch that defines medicine. It’s about partnership, not substitution.

What is your forecast for the role of AI in healthcare over the next decade?

I believe AI will become deeply embedded in healthcare over the next ten years, moving beyond niche applications to mainstream use across specialties. We’ll see more personalized medicine, where treatments are tailored using AI insights, and predictive tools will help catch diseases earlier. However, the real game-changer will be in trust and regulation—if we can establish robust frameworks for assurance and privacy, adoption will skyrocket. I’m optimistic that AI will make healthcare more accessible and efficient, but only if we prioritize the human values at its core.